Why AI-Written Blog Posts Are Not Ranking in Google

AI-written blog posts not ranking in Google was the exact problem I faced while building Peplio, well before I realized the same pattern was playing out daily at Mitu & Seema Bakery. That pattern helps explain why so many bloggers now watch their AI-written posts fail to rank.

(This article is written while observing the same pattern play out daily at Mitu & Seema Bakery—new ideas every morning, constant changes, and the same confusion about why effort doesn’t translate into results. The situations may look simple, but the lessons behind them are not.)

Every morning at Mitu & Seema Bakery begins with a new problem that sounds logical in their heads but never fixes the real issue. One day Mitu believes the bakery board font is outdated. The next day Seema wants to rewrite the “About Us” section because some AI tool said emotional words attract customers. They change things daily. New captions. New offers. New ideas. But the counter stays empty. Customers don’t suddenly appear just because words changed. Standing there and watching this routine repeat felt uncomfortable because I had lived the same cycle while building Peplio—publishing AI-written blogs regularly, convinced consistency and optimization would eventually force Google to notice. It didn’t.

I wasn’t lazy. I wasn’t careless. I followed SEO rules. I used keywords. I wrote long posts. Still, rankings refused to move. That confusion—“I’m doing everything right, so why isn’t it working?”—is exactly where most AI-written blog strategies break down.

This article is not written to attack AI. I use AI daily. This is written to explain, from direct experience, why AI-written blog posts fail to rank in Google when used the wrong way, what Google is actually reading beneath the surface, and what changed once I stopped letting AI think on my behalf.

Peplio Reality Check

AI-Written Blog Posts Not Ranking in Google: What I Actually Experienced

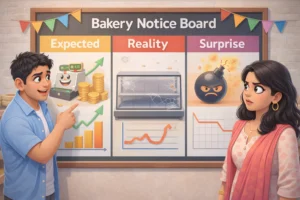

This reality check comes directly from my experience with AI-written blog posts not ranking in Google, despite doing everything “right” on paper. When people talk about this problem, they often assume penalties or algorithm hate. In my case it meant something quieter: posts were indexed, visible, and technically fine, yet stuck without growth.

- What I expected: I believed AI-written articles would rank faster because they were structured, optimized, and followed SEO best practices.
- What actually happened: Most posts indexed quickly but stalled. Impressions stayed low. Clicks were inconsistent or zero.
- What surprised me: Even detailed AI articles behaved like low-trust content once Google tested them against real user signals.

The First Big Mistake: Assuming Google Ranks Information

Most bloggers still believe Google ranks information. That belief made sense years ago. Today, it’s outdated.

Google doesn’t rank information anymore. Information is abundant. AI made it infinite. What Google ranks now is decision credibility.

AI can explain what something is.

AI struggles to explain why someone chose one path and rejected another.

This is the same mistake I kept seeing at the bakery—answers everywhere, but no real decisions changing behind the counter.

That difference sounds small until you look at ranking behavior.

When I compared my AI-written Peplio articles with my manually written ones, the difference wasn’t language quality. It was absence of choice. AI content presents conclusions as if they were inevitable. Human content exposes hesitation, uncertainty, failed routes, and trade-offs.

Google sees that.

Why “Humanizing AI Content” Doesn’t Work

Most advice around AI blogging goes like this:

- Add personal tone
- Rewrite introductions
- Change sentence structure
- Run it through detectors

I tried all of it.

I edited paragraphs heavily. I added personal phrases. I broke symmetry. I removed obvious patterns. But rankings didn’t improve.

That’s when I realized something uncomfortable:

Editing language doesn’t add experience.

Experience lives before writing begins. Editing happens after thinking is already done. AI content fails because it skips the thinking phase entirely.

This is the same mistake Mitu keeps making—decorating the bakery while ignoring the recipe.

Google Is Testing AI Content in Phases (What I Observed)

This slow suppression cycle is one of the clearest reasons AI-written blog posts that fail to rank in Google feel invisible rather than penalized.

Through Search Console, I started noticing a pattern:

- AI-written posts index quickly
- Google gives them light impressions
- Engagement stays shallow
- Rankings flatten
- Page quietly disappears from SERP growth

No penalties. No warnings. Just silence.

Google doesn’t punish AI content. It withholds trust.

This is critical. Most bloggers think failure means penalty. In reality, failure usually means indifference.
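The "index, light impressions, flatten" pattern can be checked against numbers rather than a feeling. Here is a minimal sketch, assuming you have copied a post's daily impressions out of a Search Console performance export into a plain list. The function name `classify_trend`, the 7-day window, and the 10% tolerance are all hypothetical choices made for illustration. They are not anything Google publishes, and this is not the Peplio diagnostic tool.

```python
from statistics import mean


def classify_trend(daily_impressions, window=7, tolerance=0.10):
    """Compare the latest `window` days of impressions with the window before it.

    Returns "growing", "flat", "declining", or "not enough data".
    The window and tolerance are illustrative assumptions, not official thresholds.
    """
    if len(daily_impressions) < 2 * window:
        return "not enough data"

    previous = mean(daily_impressions[-2 * window:-window])
    recent = mean(daily_impressions[-window:])

    # Without a baseline, any impressions at all count as growth.
    if previous == 0:
        return "growing" if recent > 0 else "flat"

    change = (recent - previous) / previous
    if change > tolerance:
        return "growing"
    if change < -tolerance:
        return "declining"
    return "flat"


# Made-up numbers for a post that indexed and then stalled.
stalled_post = [12, 11, 13, 12, 10, 12, 11, 12, 11, 12, 13, 11, 12, 12]
print(classify_trend(stalled_post))  # flat
```

A "flat" result for several weeks in a row, with no manual action reported, is the quiet stall described above rather than a penalty.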

When I was trying to understand why pages were getting indexed but never moving, I stopped guessing and started checking site-level signals properly. This is exactly why I built a small diagnostic tool on Peplio—to quickly see whether the issue is content depth, structure, or trust signals rather than indexing itself. I still use it before deciding whether a post needs rewriting or deeper changes.

The Missing Signal: Cost of Failure

AI never waits. Humans do. And waiting leaves marks—on thinking, on writing, and eventually on rankings.

When I made early SEO decisions for Peplio, I lost weeks on strategies that didn’t work. That delay forced reflection. That reflection changed my approach. That change altered future content.

AI never experiences delay. It never waits months for results. It never sits with uncertainty.

Google tracks that through:

- updates
- revisions
- internal linking behavior
- pacing of content changes
- engagement stabilization

AI content arrives finished. Humans arrive uncertain.

Google trusts uncertainty more than polish.

Decision-Based Writing vs Explanation-Based Writing

This is the core difference.

AI writes explanations.

Humans document decisions.

Explanation answers questions.

Decisions reveal judgment.

Judgment is what Google is trying to measure.

When I rewrote Peplio content to focus on why I didn’t do what everyone suggested, engagement improved—not instantly, but steadily.

Google doesn’t want perfect answers. It wants earned answers. This difference is why AI-written blog posts often fail to build trust beyond the first test phase.

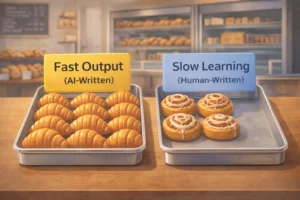

Peplio Experiment #1: AI-Written vs Decision-Written Articles

Goal

Test whether articles built around real decisions outperform AI-written explanations.

Action

I published two types of content:

- AI-generated, heavily edited articles
- Human-written articles starting from confusion and failure

AI helped with structure only after decisions were documented.

Result

AI-written posts indexed faster but plateaued.

Decision-written posts grew slower but held rankings longer.

What I’ll change next

Reduce AI involvement in reasoning completely. Use it only to clarify language after thought is complete.

This experiment confirmed for me that the failure of AI-written blog posts to rank in Google is more about missing decision signals than missing optimization.

I’ve seen this same pattern outside blog content as well. While documenting growth experiments for Peplio, I noticed that strategies driven by observation and adjustment consistently outperformed clean, automated approaches. The Peplio social media growth case study breaks this down clearly, showing how slow decisions and real feedback shaped sustainable results over time.

Why EEAT Is Not a Checklist (And AI Treats It Like One)

AI can mention experience. It can’t produce experience.

EEAT isn’t about saying:

- “I tested this”
- “I analyzed data”
- “I have experience”

EEAT is about evidence leaking naturally into writing.

When I mentioned Search Console data casually—without screenshots, without proving anything—those sections performed better than polished, proof-heavy AI paragraphs.

Why? Because humans mention proof accidentally. AI mentions proof intentionally. Treating EEAT as a checklist is another reason AI-written blog posts continue to struggle to rank in Google.

Google knows the difference.

This idea of earning trust slowly instead of chasing shortcuts also applies strongly to organic traffic. I’ve explained this in detail in a separate post on free traffic with SEO for new blogs, especially how new blogs can grow without relying on automation or volume publishing, by focusing on signals Google actually respects.

The Time Dimension AI Cannot Fake

Some lessons only appear after weeks of silence, both in Search Console and in a quiet bakery.

One of the strongest ranking signals I noticed was content evolution.

Human articles:

- change opinions
- contradict earlier versions
- update assumptions
- reference past mistakes

AI articles:

- stay frozen
- remain confident forever
- never age

Google values content that changes its mind.

That’s a brutal disadvantage for AI-only blogs.

The Illusion of Productivity (A Peplio Mistake)

Publishing more feels productive. It isn’t.

I published multiple AI articles weekly at one point. Traffic didn’t grow. Authority didn’t grow. Crawl budget didn’t help.

Then I slowed down.

I rewrote existing articles instead of adding new ones. I documented failures instead of hiding them. Rankings stabilized.

More content ≠ more value.

What This Article Will NOT Do

This article will NOT:

- say AI is banned
- promise ranking hacks
- tell you to abandon AI
- give shortcuts

There are none.

If you’re a solo blogger with no audience, no money, and only a laptop…

…then AI looks like leverage. I felt that pressure deeply. Writing faster feels like progress. But Google doesn’t reward speed anymore. It rewards signal density—how much thinking exists per paragraph.

One strong human article beats ten clean AI articles because it creates something Google can’t replicate.

Why Competitive Niches Kill AI Blogs First

Low-competition niches tolerate AI content longer. Competitive niches don’t.

Why? Because when many pages explain the same thing, Google has to rank based on judgment quality.

AI repeats consensus. Humans diverge.

Consensus rarely ranks first.

Peplio Experiment #2: Updating AI Content vs Rewriting From Scratch

Goal

See if updating AI articles improves rankings.

Action

I updated old AI articles with better structure and clarity. Separately, I rewrote one article completely from experience.

Result

Updated AI articles barely moved. Rewritten article slowly gained impressions.

What I’ll change next

Rewrite instead of repair.
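One way to make a comparison like this concrete is to compute the relative change in impressions before and after each edit. The sketch below is only an illustration. The function names and the sample totals are hypothetical, not figures from the Peplio experiment, and it assumes the before and after totals were copied by hand from Search Console for periods of equal length.

```python
def relative_change(before, after):
    """Return the fractional change from `before` to `after`.

    Returns None when there is no baseline, since the change is undefined.
    """
    if before == 0:
        return None
    return (after - before) / before


def compare_edits(posts):
    """Map each post label to its relative change in impressions.

    `posts` is a dict of label -> (impressions_before, impressions_after).
    """
    return {
        label: relative_change(before, after)
        for label, (before, after) in posts.items()
    }


# Placeholder totals, invented purely to show the call shape.
sample = {
    "updated-ai-post": (200, 210),
    "rewritten-post": (200, 300),
}
for label, change in compare_edits(sample).items():
    print(label, change)
```

Comparing equal-length periods matters here. Otherwise a longer "after" window will look like growth even when nothing changed.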

Why Editing AI Content Feels Productive but Isn’t

Editing feels like work. But it avoids thinking.

The hard part is deciding:

- what not to include
- what advice didn’t apply
- what failed

AI avoids that discomfort. Humans shouldn’t.

Questions I Struggled With While Building Peplio

- Why does Google test and abandon pages silently?
- Is originality about words or decisions?
- How much AI is too much?
- Why do some posts age better than others?
- Can small sites still win?

I don’t have all answers. But I now ask better questions.

The Peplio Rule on AI Content

Use AI to:

- structure
- summarize
- clarify

Never let AI:

- decide
- judge
- conclude

That boundary changed everything.

What I’m Testing Next

I’m testing:

- fewer posts
- deeper updates
- visible opinion shifts
- documented failures

I want Google to see thinking over time.

How This Changed Things at Mitu & Seema Bakery

After all this, I stopped treating the bakery like a metaphor and actually applied the same thinking there. Instead of rewriting boards or chasing new captions, I asked Mitu and Seema one simple question: what’s the one decision we’re avoiding because it feels slow? The answer was uncomfortable but obvious. They were changing messages daily without ever waiting long enough to see what worked. So we froze everything—no new offers, no new captions, no redesigns—and focused on one experiment only.

We picked a single change, tracked it quietly, and decided not to touch anything for weeks. At first, nothing happened. That silence made them nervous, just like Search Console silence used to make me panic. But slowly, patterns started showing up. A few repeat customers. Familiar faces. Not a rush, not viral growth—just enough to tell us we were finally doing something right.

The bakery didn’t transform overnight. But the confusion stopped. Mitu stopped blaming the board. Seema stopped chasing every new idea. And that calm clarity, the same feeling I now look for in Peplio analytics, was the real result. Not growth, not numbers, but confidence that the next decision would be based on learning, not guessing. The same thinking that explained why my AI-written blog posts were not ranking in Google also reduced confusion at the bakery: slow the decisions down instead of chasing surface fixes.

One Action for You

Before publishing your next blog post, ask:

“What decision did I make here that AI wouldn’t?”

If you can’t answer that, don’t publish yet.

AI didn’t kill blogging.

It exposed shallow blogging.

And that’s exactly why AI-written blog posts are not ranking in Google.

Questions I Struggled With While Building Peplio

1. Can AI-written blog posts rank in Google at all?

Yes, they can rank, especially in low-competition niches. But ranking temporarily and sustaining rankings are two different things. In my case, AI-written posts indexed fast but stalled once Google tested them against real user behavior and competing content with experience signals.

2. Is Google penalizing AI-generated content?

From what I’ve observed, Google is not penalizing AI content directly. There was no manual action, no warning. Instead, Google simply doesn’t trust it enough to push it further. The content stays indexed but doesn’t grow.

3. How does Google know a blog post is AI-written?

I don’t think Google is “detecting AI” the way tools claim. What Google does detect is the absence of human signals—no hesitation, no failed paths, no opinion shifts, no content evolution. That pattern shows up very clearly in AI-only blogs.

4. Is editing AI-written content enough to make it rank?

In my experience, no. Editing improves language, not thinking. I edited multiple AI articles heavily, but rankings didn’t improve. Once I rewrote from actual decisions and failures, results slowly changed.

5. How much AI usage is safe for blogging?

I no longer think in terms of “percentage of AI usage.” Instead, I ask: Who made the decision here—me or the AI?

If AI decides the structure, angle, and conclusion, that’s risky. If AI helps after decisions are made, that’s usually safe.

6. Should I delete old AI-written blog posts?

I didn’t delete mine. I either:

- rewrote them completely from experience, or
- left them as they were and focused on stronger content

Deleting didn’t feel necessary. Rewriting mattered more.

7. Why do AI articles index fast but fail to rank?

Indexing is technical. Ranking is behavioral. AI articles satisfy crawlers quickly but often fail to satisfy user engagement and trust signals once tested in SERPs.

8. Does Google prefer human-written content?

Google prefers useful, experienced, trustworthy content. Right now, humans are much better at providing that. If AI ever gains real experience (which it can’t), the situation might change—but today, human judgment still wins.

9. Are long AI-written articles better than short ones?

Length didn’t help me. I had long AI articles that still failed. Depth comes from decisions, not word count. A shorter article with lived experience outperformed longer AI explanations.

10. Can AI-assisted content rank if the ideas are original?

Yes. This is what I’m doing now. I think, decide, test, fail, and then use AI to help structure or clarify. In that setup, the content remains mine, and AI stays a tool—not the author.

11. Why do AI blogs feel “complete” but still fail?

Because they answer questions without tension. There’s no risk, no uncertainty, no trade-off. Real blogs feel slightly uncomfortable because they expose what didn’t work. Google seems to reward that discomfort.

12. Should beginners avoid AI completely?

No. Beginners should avoid letting AI think for them. Using AI to speed up typing is fine. Using it to replace learning is dangerous.

13. Is AI content bad for brand building?

If overused, yes. Readers may not immediately notice, but over time, everything sounds the same. That sameness kills brand memory. I didn’t want Peplio to sound like every other SEO blog.

14. How long did it take to see improvement after changing approach?

Not instantly. Weeks passed with no visible change. But over time, impressions stabilized, pages stayed indexed longer, and I stopped panicking at silence. That alone was a big shift.

15. What’s the biggest mistake people make with AI blogging?

Thinking speed equals progress. Publishing faster feels productive, but Google rewards learning density, not publishing velocity.

This is why the failure of AI-written blog posts to rank in Google is not an AI problem, but a decision-making problem.